Email deliverability is critical to cold email.

Without it, you’re spending thousands of dollars sending emails into a black hole where your prospects will never see them.

BUT, email deliverability comes with a dark side – nobody REALLY knows how to measure it (yes, even those “email experts”).

This is a big problem for email marketers, since they have no clue:

what % of their market they’re actually reaching today

what choices in their setup/activities are leading to this baseline

whether their next campaign decision is going to silently tank their deliverability

whether they need to adjust their forecast because they just lost their most effective marketing channel

whether the email deliverability products/services they’re buying for $$$$ are even doing anything of value (“joke’s on you, I fix a problem you can’t measure”)

😱😱😱 This is stressful 😱😱😱

After spending 5 years without clear answers from the experts (and $100k+ in spend on their services), we took matters into our own hands: first, we came up with a way of measuring deliverability, and second, we used this new measure to test as many “best practices” and products as we could to understand what REALLY works (the subject of future blog posts).

In this article, we’ll walk you through:

How to define email deliverability

The different approaches to measuring your deliverability

Our recommended approach to measuring deliverability

What IS Deliverability?

To measure something, you first need to know what “it” is.

To do this, we interviewed many people in our network (GTM teams, agencies, email tool builders) to see how people thought about this.

We found two prevalent definitions…

Definition #1: Bounce Rate

“The ability to not bounce an email.” This was the preferred definition of most vendors in the cold email industry. That made sense: first, it’s an easy-to-understand metric, and second, the bar for it is MUCH lower than most people imagine.

Definition #2: Primary Inbox Placement Rate

“The ability to deliver emails into the prospect’s inbox,” meaning what counts is whether the email is actually SEEN. This is the preferred definition of sales and marketing teams, since that’s ultimately what influences the bottom line.
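To make the gap between the two definitions concrete, here is a minimal Python sketch that scores the same batch of sends both ways. The outcome labels (“bounced”, “primary”, “other”, “spam”) and the choice of total sends as the denominator are our assumptions for illustration, not an industry standard.

```python
from collections import Counter

# Hypothetical per-message outcome labels (an assumption for this sketch):
#   "bounced" - the receiving server rejected the message
#   "primary" - accepted and filed in the primary inbox
#   "other"   - accepted but filed in another tab or folder (e.g. Promotions)
#   "spam"    - accepted but filed in spam/junk
VALID_OUTCOMES = {"bounced", "primary", "other", "spam"}


def _counts(outcomes: list[str]) -> Counter:
    """Count outcomes, rejecting empty batches and unknown labels."""
    if not outcomes:
        raise ValueError("need at least one outcome")
    unknown = set(outcomes) - VALID_OUTCOMES
    if unknown:
        raise ValueError(f"unknown outcome labels: {sorted(unknown)}")
    return Counter(outcomes)


def bounce_rate(outcomes: list[str]) -> float:
    """Definition #1: share of all sent messages that bounced."""
    return _counts(outcomes)["bounced"] / len(outcomes)


def primary_inbox_placement_rate(outcomes: list[str]) -> float:
    """Definition #2: share of all sent messages that reached the primary inbox."""
    return _counts(outcomes)["primary"] / len(outcomes)


# Ten sends, zero bounces, but eight land in spam.
batch = ["primary"] * 2 + ["spam"] * 8
print(bounce_rate(batch))                   # 0.0
print(primary_inbox_placement_rate(batch))  # 0.2
```

A batch can score perfectly under Definition #1 while failing Definition #2, which is exactly why the choice of definition matters.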

How Do You Measure Deliverability?

Once we knew WHAT we were measuring, we started thinking about HOW to measure it and what was feasible.

We spent several months identifying signals we could use to generate these metrics and assessing their accuracy. Once we had these signals, we identified the technical challenges of acquiring them in a way that would yield actionable conclusions.

Approach #1: Challenges of Measuring Bounce Rate

Secure email servers won’t bounce emails: why? Because bouncing an email gives spammers crucial feedback they can use when sending subsequent spam. Since there is no way to know which servers do this, or when, bounce rate makes for a weak signal.

Fake bounced emails: secure email servers will often fake a bounce message such as ‘inbox does not exist’. Again, this is a security feature that stops spammers from failing over to other mechanisms for reaching their targets. How can you tell if a bounce is fake? (We figured this one out, but that’s the subject of a future blog post 😄)

Approach #2: Challenges of Measuring Inbox Placement

No Formal Feedback Loop: there is no protocol that gives senders an indication of what folder their email was classified into on the recipient side.

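Different ESP Behaviors: because there is no standard feedback loop, and each email service provider classifies and files mail in its own way, measuring inbox placement means integrating with and keeping up with many providers, which is logistically expensive.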

Inbox-Level Biases: your prospects’ inboxes do spam classification based on individual history. For example, Gmail will mark emails as Spam because they “look like other emails that were marked as spam” by that user. This means that if you build a ‘private network’ of inboxes to run deliverability tests, those inboxes won’t share the history of your prospects’ inboxes.

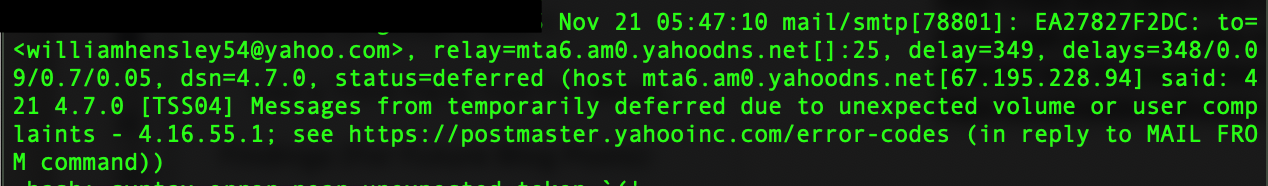

Observation Impacts What You’re Measuring: this is a bit like having a thermometer that changes the temperature of your food. In our testing, we found that many third-party tools caused our domain/IP address to be blacklisted (as you can see below, a single Glockapps test got one of our domains burned with Yahoo). This is problematic: if measuring deliverability is what ends up killing it, the exercise is pointless.

Glockapps killing our deliverability…

Being a GTM team ourselves, we went with Definition #2 to try to figure out inbox placement. We then made the following implementation decisions to try to create this metric.

Technical Implementation Decisions

1. Focus on Gmail + MSFT:

These make up ~80% of the ESP market overall (more if you’re targeting SMEs), so we decided this would give us the highest ROI on our time.
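Focusing on Gmail and Microsoft first requires knowing which provider hosts each prospect’s mailbox. One common approach (our assumption here, not something described in this study) is to look at the domain’s MX records: Google-hosted mail uses MX hosts under google.com, and Microsoft 365/Outlook.com uses hosts under protection.outlook.com. The sketch below classifies an already-resolved list of MX hostnames (for example, fetched with dnspython’s `dns.resolver.resolve(domain, "MX")`); the suffix table is illustrative, not exhaustive.

```python
# Illustrative MX hostname suffixes per provider (not an exhaustive list).
PROVIDER_MX_SUFFIXES = {
    "google": ("google.com", "googlemail.com"),
    "microsoft": ("protection.outlook.com",),
}


def _matches(host: str, suffix: str) -> bool:
    """True if host equals suffix or is a subdomain of it."""
    return host == suffix or host.endswith("." + suffix)


def classify_provider(mx_hosts: list[str]) -> str:
    """Map a domain's MX hostnames to 'google', 'microsoft', or 'other'."""
    for raw in mx_hosts:
        host = raw.lower().rstrip(".")  # DNS answers often carry a trailing dot
        for provider, suffixes in PROVIDER_MX_SUFFIXES.items():
            if any(_matches(host, s) for s in suffixes):
                return provider
    return "other"


print(classify_provider(["aspmx.l.google.com."]))                      # google
print(classify_provider(["example-com.mail.protection.outlook.com"]))  # microsoft
print(classify_provider(["mx1.example.pphosted.com"]))                 # other
```

One caveat with this heuristic: a company that routes mail through a security gateway (for example, a Proofpoint-hosted MX) shows up as “other” even if its mailboxes live in Microsoft 365, so MX-based counts tend to undercount the big two.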

2. Use a Third Party Email Warmup Tool

We thought it would be better to work with a network of REAL inboxes rather than fake ones to get the most realistic results.

Because we were suspicious of these tools, we did an evaluation to check them all for accuracy. For example, we intentionally dirtied a domain/ip to the point where we were extremely sure that emails sent would end up in spam. We then ran a controlled test where we sent emails both to the warmup network and a trusted group of friends’ business email accounts. We then compared the results to see which email warmup tools came closest to reality (surprisingly there was a huge variance), and chose the one tool that seemed most accurate.
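The accuracy check boils down to comparing each tool’s reported number with what the trusted accounts actually saw. Here is a minimal sketch, assuming each tool reports a single inbox placement rate for the test window and the trusted accounts’ results are labelled by hand as “inbox” or “spam”; the tool names and figures are made up.

```python
def observed_inbox_rate(trusted_results: list[str]) -> float:
    """Share of trusted-account messages that landed in the inbox ('inbox' vs 'spam')."""
    if not trusted_results:
        raise ValueError("need at least one trusted result")
    return sum(r == "inbox" for r in trusted_results) / len(trusted_results)


def rank_tools_by_error(reported: dict[str, float], observed: float) -> list[tuple[str, float]]:
    """Rank warmup tools by absolute gap between reported and observed inbox rate."""
    errors = {tool: abs(rate - observed) for tool, rate in reported.items()}
    return sorted(errors.items(), key=lambda item: item[1])


# Deliberately dirtied domain: every trusted account saw the email in spam.
truth = observed_inbox_rate(["spam"] * 10)
reported = {"tool_a": 0.9, "tool_b": 0.1, "tool_c": 0.4}  # hypothetical numbers
print(rank_tools_by_error(reported, truth))
# [('tool_b', 0.1), ('tool_c', 0.4), ('tool_a', 0.9)]
```

With a domain this dirty, any tool reporting high inbox placement is plainly wrong, which makes the variance between tools easy to see.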

3. Send Emails Through a Custom Email Server

Using a third party warmup tool introduced two problems we had to deal with.

First, there was the black box of their inbox network and sending algorithms (eg: do they use a bot to send emails through Gmail UI, or do they use SMTP? Are they evenly distributing the types of inboxes to which content is sent? What content are they sending? etc.) Therefore, we needed a way to control/understand these variables.

Second, to use these tools we needed to connect an Email Service Provider (ESP). These too were black boxes. For example, Gmail does proactive spam filtering when sending and sends emails from different IPs with different reputations, which makes it hard to run controlled experiments. These ESPs also hide raw email logs that often contain very valuable data: for example, during the SMTP exchange, receiving servers that block an email will sometimes return error codes that are not included in the delivery failure message Gmail sends you (if it sends one at all).
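Here is the kind of detail raw logs expose. Per RFC 5321, every SMTP reply starts with a three-digit code (4xx is a temporary failure, 5xx a permanent one), and many servers add an RFC 3463 enhanced status code such as 5.7.1. The sketch below parses a possibly multi-line reply as it might appear in a server log; the sample reply text is invented for illustration, not copied from any provider.

```python
import re
from dataclasses import dataclass
from typing import Optional

# "550-..." marks a continuation line, "550 ..." the last line (RFC 5321).
REPLY_LINE = re.compile(r"^(\d{3})(?:[ -](.*))?$")
# Enhanced status code: class.subject.detail, where class is 2, 4 or 5 (RFC 3463).
ENHANCED = re.compile(r"^([245]\.\d{1,3}\.\d{1,3})(?:\s+|$)")


@dataclass
class SmtpReply:
    code: int
    enhanced: Optional[str]
    text: str

    @property
    def transient(self) -> bool:
        return 400 <= self.code < 500

    @property
    def permanent(self) -> bool:
        return 500 <= self.code < 600


def parse_reply(lines: list[str]) -> SmtpReply:
    """Parse the lines of one SMTP reply into code, enhanced code and text."""
    code: Optional[int] = None
    enhanced: Optional[str] = None
    texts: list[str] = []
    for line in lines:
        match = REPLY_LINE.match(line.rstrip("\r\n"))
        if not match:
            raise ValueError(f"not an SMTP reply line: {line!r}")
        line_code, rest = int(match.group(1)), match.group(2) or ""
        if code is None:
            code = line_code
        elif line_code != code:
            raise ValueError("reply lines carry different codes")
        status = ENHANCED.match(rest)
        if status:
            enhanced = enhanced or status.group(1)
            rest = rest[status.end():]
        texts.append(rest)
    if code is None:
        raise ValueError("empty reply")
    return SmtpReply(code, enhanced, " ".join(texts))


reply = parse_reply([
    "550-5.7.1 Message rejected due to policy.",
    "550 5.7.1 See the receiving domain's postmaster page.",
])
print(reply.code, reply.enhanced, reply.permanent)  # 550 5.7.1 True
```

Keeping the enhanced code alongside the basic code is what lets you tell a policy rejection (5.7.x) apart from a mailbox problem (5.1.x), a distinction the ESP’s summary notification may not pass along.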

The best solution we came up with was deploying a custom email server that sat between the warmup tool and the outside world, so we:

Built a custom email server, which then gave us raw log visibility into every email operation that was taking place via the third party tools.

Wrote custom code into the email server itself that allowed us to intercept/override activities of the Inbox Warmer, which let us control the parameters of the experiment (for example, making sure emails were only being sent to known ESPs and in specific patterns).
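To illustrate the kind of interception described above, here is a hypothetical per-recipient policy hook in Python. It assumes the server calls `decide()` once per recipient before relaying, that recipient domains can already be mapped to a provider label, and that the experiment caps sends per provider per hour. `RelayGate` and its parameters are our own invention, not the code used in this study, and wiring it into a real mail server depends on that server’s extension mechanism.

```python
import time
from collections import defaultdict, deque
from typing import Callable


class RelayGate:
    """Hypothetical per-recipient policy: allowlisted providers plus an hourly cap."""

    def __init__(
        self,
        provider_of: Callable[[str], str],
        allowed_providers: set[str],
        max_per_hour: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.provider_of = provider_of        # recipient domain -> provider label
        self.allowed = set(allowed_providers)
        self.max_per_hour = max_per_hour
        self.clock = clock                    # injectable for testing
        self.sent = defaultdict(deque)        # provider -> timestamps of relayed sends

    def decide(self, recipient: str) -> tuple[bool, str]:
        """Return (relay?, reason) for one recipient address."""
        domain = recipient.rsplit("@", 1)[-1].lower()
        provider = self.provider_of(domain)
        if provider not in self.allowed:
            return False, f"provider {provider!r} is outside the experiment"
        now = self.clock()
        window = self.sent[provider]
        while window and now - window[0] >= 3600:  # drop sends older than an hour
            window.popleft()
        if len(window) >= self.max_per_hour:
            return False, f"hourly cap reached for {provider}"
        window.append(now)
        return True, "ok"


providers = {"gmail.com": "google", "outlook.com": "microsoft"}
gate = RelayGate(lambda d: providers.get(d, "other"), {"google", "microsoft"}, max_per_hour=2)
print(gate.decide("alice@gmail.com"))  # (True, 'ok')
print(gate.decide("bob@example.org"))  # (False, "provider 'other' is outside the experiment")
```

Denied recipients are not counted against the cap, so the hourly window reflects only mail that actually left the server.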

Methodology:

Note: while the methodology here could be tighter by scientific standards, keep in mind this was a self-funded side project with no clear probability of success, so we did cut some corners. I do, however, stand by this being more transparent and thorough than any other email deliverability study I have found to date.

Equipment:

Domains: we procured two sets of domains (20 total). The first was a brand new pool of domains that we aged 2-3 months. The second was an old pool of domains that we had done a lot of email volume on over the years, but had not been used for 9 months.

IP Addresses: before starting any experiments, we verified that our IP addresses were clean using SpamAssassin, MXToolbox and the major email gateway providers (Proofpoint, Barracuda).
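For the DNS-based part of this kind of check, most IP blocklists follow the DNSBL convention described in RFC 5782: reverse the IPv4 address’s octets, append the list’s zone, and look up an A record. A minimal sketch; the zone `zen.spamhaus.org` is only an example, and each list has its own usage terms (some refuse queries sent through public resolvers).

```python
import ipaddress
import socket


def dnsbl_query_name(ip: str, zone: str) -> str:
    """Build the DNSBL lookup name: reversed IPv4 octets prepended to the list's zone."""
    addr = ipaddress.IPv4Address(ip)  # raises ValueError for malformed or non-IPv4 input
    return ".".join(reversed(str(addr).split("."))) + "." + zone


def is_listed(ip: str, zone: str) -> bool:
    """Query one DNSBL zone; an A record in 127.0.0.0/8 means the IP is listed."""
    try:
        answer = socket.gethostbyname(dnsbl_query_name(ip, zone))
    except socket.gaierror:
        # NXDOMAIN usually means "not listed", but this also swallows other
        # resolution failures, so False is not proof that the IP is clean.
        return False
    if answer.startswith("127.255.255."):
        # Some lists (Spamhaus, for example) use these answers to report query errors.
        raise RuntimeError(f"{zone} returned error code {answer}")
    return answer.startswith("127.")


print(dnsbl_query_name("192.0.2.1", "zen.spamhaus.org"))  # 1.2.0.192.zen.spamhaus.org
# Per RFC 5782, 127.0.0.2 is the conventional "always listed" test entry, so
# is_listed("127.0.0.2", zone) should return True given network access and a
# resolver the list accepts.
```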

Procedure:

Phase 1:

In this phase, our goal was to get accurate reporting on SPAM vs. INBOX emails. At first, we had to experiment to find a configuration that would intentionally send our emails to SPAM by corrupting our normally healthy setup; the easiest one we found was using a domain registered less than 7 days earlier. We confirmed the configuration was bad by emailing a network of trusted inboxes and verifying that the email(s) went to SPAM. We then connected the inboxes to our email warmup services.

Phase 2:

Once we had picked our email warmup service, we transitioned to a ‘proper’ setup with an old domain, correct records, etc. We manually sent emails to our trusted network of inboxes until we got a mixed result (some in SPAM, some not). Once we had this state, we turned on the email warming network and monitored results to get a baseline.

Phase 3:

We started to run experiments with various ‘levers’ for email deliverability to see if we could get the generated stats to change predictably (eg: if I included certain keywords, would it cause an observable negative change in deliverability?)
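This write-up doesn’t describe a statistical test, but one standard way to ask whether a lever moved the stats beyond noise is a two-proportion z-test on inbox counts before and after the change. A minimal sketch under the usual normal-approximation assumptions (reasonably large samples, independent messages); per-inbox history can violate independence, so treat the p-value as a rough guide. The counts in the usage lines are hypothetical.

```python
import math


def two_proportion_z_test(inbox_a: int, sent_a: int, inbox_b: int, sent_b: int) -> tuple[float, float, float]:
    """Compare inbox placement of a baseline (a) and a variant (b).

    Returns (rate_b - rate_a, z statistic, two-sided p-value).
    """
    if sent_a <= 0 or sent_b <= 0:
        raise ValueError("both groups need at least one sent message")
    rate_a, rate_b = inbox_a / sent_a, inbox_b / sent_b
    pooled = (inbox_a + inbox_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:  # both groups all-inbox or all-spam: no detectable difference
        return rate_b - rate_a, 0.0, 1.0
    z = (rate_b - rate_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return rate_b - rate_a, z, p_value


# Hypothetical: baseline 80/100 in inbox, 40/100 after adding a trigger keyword.
diff, z, p = two_proportion_z_test(80, 100, 40, 100)
print(round(diff, 2), round(z, 2), p < 0.001)  # -0.4 -5.77 True
```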

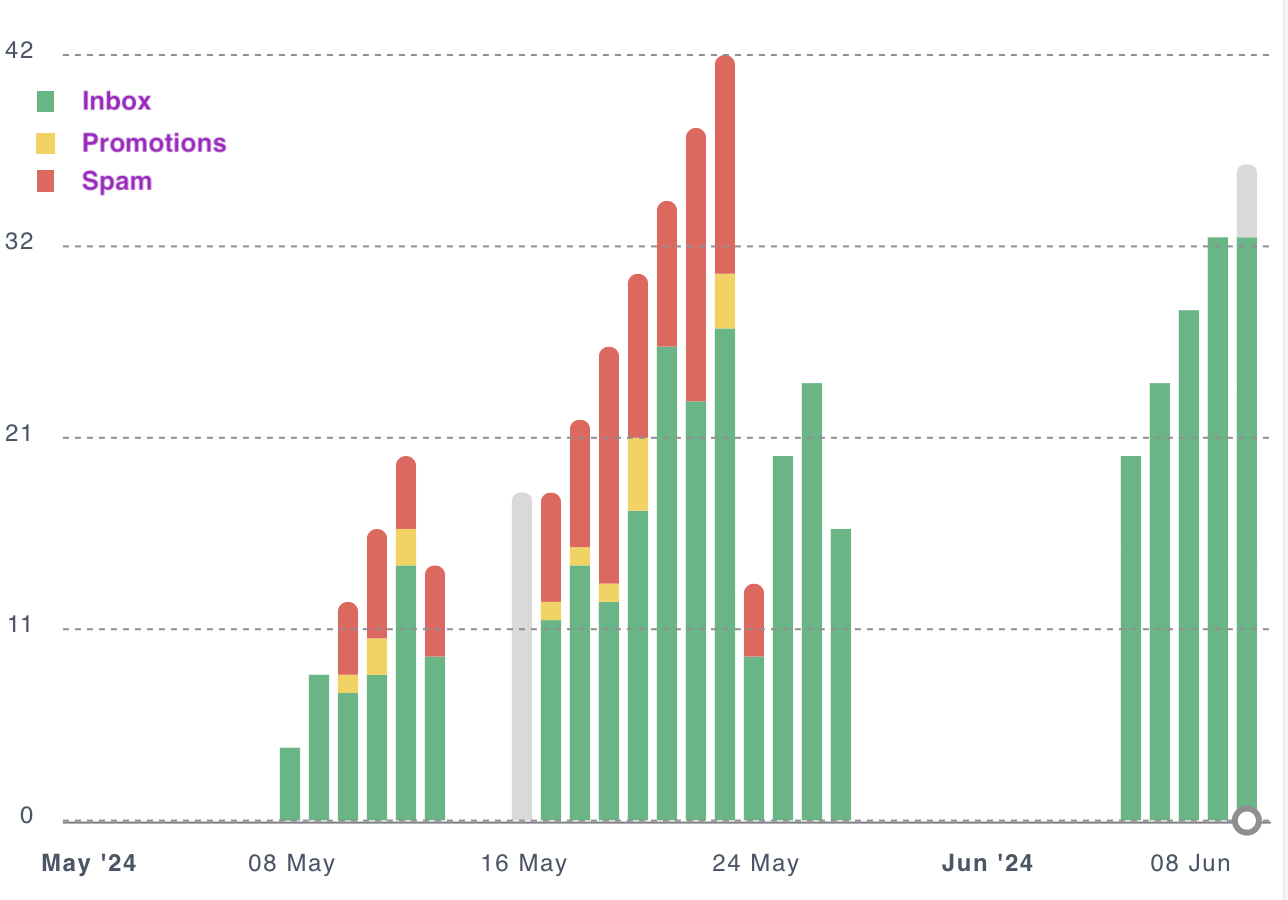

An example of what this looks like can be seen below in the results section.

As an additional check, for each changed lever, we continued to send emails to a pool of 25 trusted users to see if the changes in deliverability were consistent with what our metrics were showing us – thankfully, these were directionally consistent.**

**NOTE: we say ‘directionally consistent’ here because we did not have the resources to send 25 emails for every permutation of settings we changed. While that would have made the results more conclusive, we treated this check more as a litmus test than as a key data point that would change our experiment’s conclusions.
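For the litmus test, “directionally consistent” can be pinned down as: the warmup-network metric and the trusted-pool result moved the same way after a lever change. A small sketch, assuming both are expressed as inbox placement rates and using a hypothetical `tolerance` below which a change counts as flat:

```python
def direction(delta: float, tolerance: float = 0.0) -> int:
    """+1 for an increase, -1 for a decrease, 0 if within the tolerance band."""
    if delta > tolerance:
        return 1
    if delta < -tolerance:
        return -1
    return 0


def directionally_consistent(
    metric_before: float, metric_after: float,
    trusted_before: float, trusted_after: float,
    tolerance: float = 0.0,
) -> bool:
    """True if the warmup metric and the trusted-pool rate moved the same way."""
    return direction(metric_after - metric_before, tolerance) == direction(
        trusted_after - trusted_before, tolerance
    )


# Hypothetical: warmup metric fell from 60% to 30%; trusted pool fell from 15/25 to 9/25.
print(directionally_consistent(0.60, 0.30, 15 / 25, 9 / 25))  # True
```

With 25 trusted inboxes, a single message moves the trusted rate by 4 percentage points, which is one reason a direction-only comparison is the honest claim to make.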

Results:

Once we had a system for measuring deliverability, we started to run a series of experiments where we changed parameters like domain age, domain/ip reputation, sending infrastructure, email content, etc. As we made these changes, we saw very clear changes to the deliverability metrics that were being generated, which reaffirmed our confidence that we could use this system to dynamically measure how our cold email behavior is impacting our deliverability.

Here’s ONE example that shows how the same server/IP/domain can have its messages go from SPAM to the primary inbox by modifying the raw email content with various algorithms: the first half shows inbox placement using the inbox warmer’s default content, and the second half shows what it looks like with our own custom content optimizations (more on that in a future post).

Conclusions:

From this experience, we reached several conclusions, which led to more experiments (the topic of many future blog posts):

Email deliverability is not impossible to measure. We were able to define our own email delivery metric (inbox placement %) and run many experiments where we saw clear changes in this metric, leading us to better understand what truly impacts deliverability.

Controlling Infrastructure Is Key: delegating sending infrastructure to Gmail/Outlook has many positives and negatives (topic for another post). However, one big negative is that their sending infrastructure is a black box that keeps changing, and you can’t reliably measure deliverability in an environment you can neither see nor control.

Email deliverability tools can largely not be trusted. We were shocked by just how many untrue metrics some of these email tools were showing (even the volume of emails being sent). When we raised some of these concerns with the creators of these tools, we realized many of them simply did not understand the foundations of email delivery and had built many of these features without ever testing them.

Keep It Simple Stupid: throughout this process, we were able to discover a handful of levers that had big impacts on deliverability, and they amounted to very obvious things in retrospect (something we’ll discuss in future posts).

Future Blog Posts

Now that we’ve established how we measure deliverability, we have a TON of studies to share with you on which best practices and cold email products actually work, vs. those that don’t.

If you liked this article, give it a ‘LIKE’ and we’ll convert this to a formal newsletter and keep posting.

Michael Schwanz

Author